History of the Nuclear World, Part III

Seventy-eight years ago, we tested the first atomic bomb. And then we used it.

Bomb Design

The two basic designs for atomic bombs developed at Los Alamos are still used today, though with refinements that increase their explosive yield and shrink their size.

In his introductory lectures, Robert Serber explained the basic problem that all bomb designers have to solve. Once the chain reaction begins, it takes about 80 generations of neutrons to fission a whole kilogram of material. This takes place in about 0.8 microseconds, less than a millionth of a second. “While this is going on,” Serber said, “the energy release is making the material very hot, developing great pressure and hence tending to cause an explosion.”

That is a bit of an understatement. The heat quickly rises to about 10 million degrees Celsius. At this temperature the uranium is no longer a metal; it has become a plasma, a gas of ionized atoms under tremendous pressure. The plasma expands at great velocity, pushing the atoms farther apart, lengthening the time between neutron collisions, and allowing more neutrons to escape without hitting any atoms. The material would thus blow apart before the weapon could achieve its full explosive yield. When this happens in a poorly designed weapon, it is called a “fizzle.” There is still an explosion, just a smaller one than designed and predicted.
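Serber’s round numbers (about 80 generations, about 0.8 microseconds) can be checked with a little arithmetic. This is only a sketch under two simplifying assumptions that are not stated in the text: the neutron population doubles every generation, and each generation lasts about 10 nanoseconds (a “shake”). Real multiplication rates and generation times vary as the reaction proceeds.

```python
import math

AVOGADRO = 6.022e23        # atoms per mole
U235_MOLAR_MASS = 235.04   # grams per mole
GENERATION_TIME_S = 1e-8   # assumed ~10 ns per neutron generation (a "shake")


def atoms_in(grams: float, molar_mass: float) -> float:
    """Number of atoms in a given mass of a single pure isotope."""
    return grams / molar_mass * AVOGADRO


def doubling_generations(n_atoms: float) -> float:
    """Generations for a population that doubles each generation, starting
    from one neutron, to reach n_atoms fissions (simplified: ignores
    neutron losses and the off-by-one of cumulative totals)."""
    return math.log2(n_atoms)


atoms = atoms_in(1000.0, U235_MOLAR_MASS)  # one kilogram of U-235
gens = doubling_generations(atoms)
elapsed_s = gens * GENERATION_TIME_S

print(f"atoms in 1 kg of U-235: {atoms:.2e}")
print(f"doubling generations:   {gens:.1f}")
print(f"elapsed time:           {elapsed_s * 1e6:.2f} microseconds")
```

Under these assumptions the script prints about 2.56e+24 atoms, about 81 generations, and about 0.81 microseconds, consistent with Serber’s figures.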

Led by Robert Oppenheimer, the scientific teams developed two methods for achieving the desired mass and explosive yield. The first is the gun assembly technique, which rapidly brings together two subcritical masses to form the critical mass necessary to sustain a full chain reaction. The second is the implosion technique, which rapidly compresses a single subcritical mass until it reaches critical density.

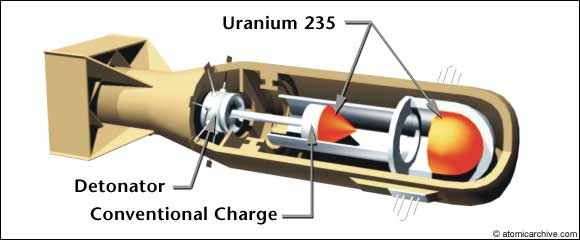

The Uranium Gun

The gun design is the less complex of the two. It basically involves placing a subcritical amount of uranium-235 at one end of a gun barrel and firing a second subcritical plug of U-235 into it. To avoid a fizzle, the plug has to complete the assembly before stray neutrons can start the chain reaction prematurely, which requires a speed of about 1,000 feet per second. The material is also surrounded by a “tamper” of uranium that helps reflect escaping neutrons back into the bomb core, thus reducing the amount of material needed to achieve a critical mass.

The nuclear weapon that the United States dropped on Hiroshima, Japan, on August 6, 1945, was a gun-type weapon. Called “Little Boy,” it contained a gun barrel that weighed about 1,000 pounds and was six feet long. The physics was so well understood, even at that time, that the design was used in combat without first being test-exploded.

Today, this is almost certainly the design that a terrorist group would try to duplicate if it could acquire enough highly enriched uranium. The Hiroshima bomb used 64 kilograms of highly enriched uranium. Today, a similar bomb could be built with approximately 25 kilograms or less, forming an assembled sphere about the size of a small melon.
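A rough comparison of timescales shows why a plug moving at only about 1,000 feet per second is enough, even though the chain reaction itself lasts under a microsecond. This sketch assumes, purely for illustration, that the plug covers the full six-foot barrel at a constant 1,000 feet per second (in reality it accelerates from rest, and the final closing distance is shorter), and it reuses Serber’s 0.8-microsecond figure.

```python
BARREL_LENGTH_FT = 6.0      # Little Boy's barrel length (from the text)
PLUG_SPEED_FT_S = 1000.0    # approximate plug speed (from the text)
CHAIN_REACTION_S = 0.8e-6   # ~80 neutron generations, per Serber (from the text)

# Assumption: the plug travels the whole barrel at constant speed.
assembly_time_s = BARREL_LENGTH_FT / PLUG_SPEED_FT_S
ratio = assembly_time_s / CHAIN_REACTION_S

print(f"assembly time: {assembly_time_s * 1e3:.1f} ms")
print(f"assembly takes ~{ratio:,.0f} times longer than the chain reaction")
```

This prints about 6.0 milliseconds, roughly 7,500 times longer than the chain reaction. The comparison suggests the plug does not literally outrun the reaction; its speed only has to be high enough that assembly finishes before a stray neutron starts the reaction early.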

The Problem with Plutonium

Gun-design weapons can use only uranium as a fissile material. Reactor-produced plutonium contains plutonium-240, which fissions spontaneously and emits stray neutrons; these start the chain reaction before the plug can be driven into place, causing the device to explode prematurely and fizzle.

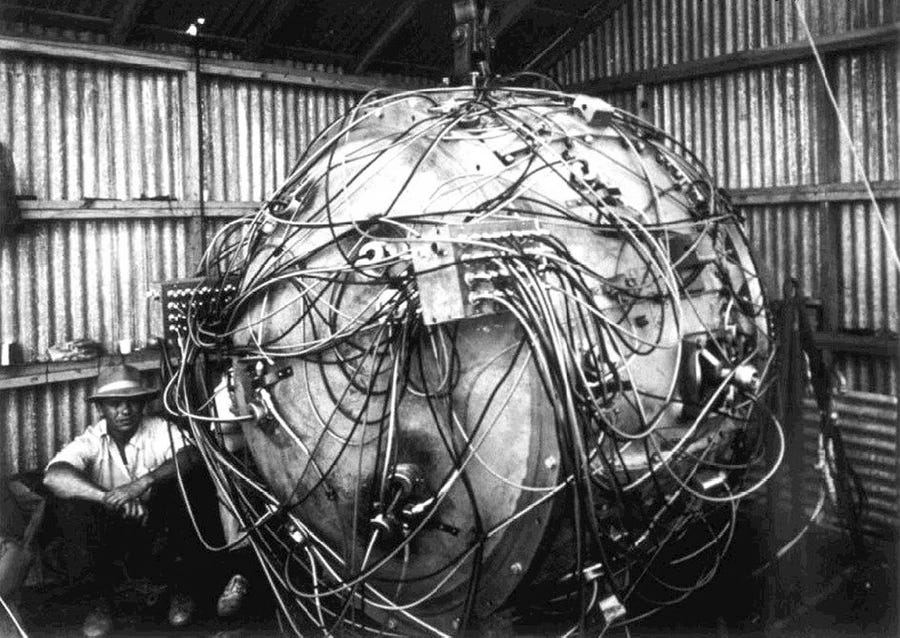

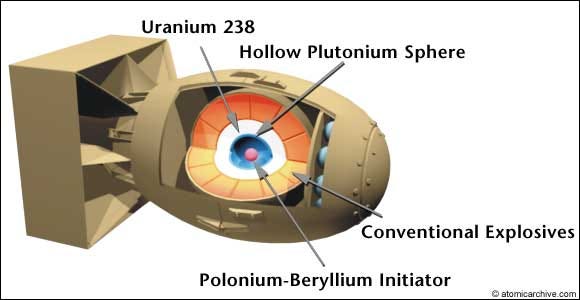

But plutonium, like uranium, can be used in a second design that uniformly compresses the material to achieve a critical mass. This design is more complex but allows for a smaller device, such as those used in today’s missile warheads. The implosion design was used in the first nuclear explosion, the Trinity test near Alamogordo, New Mexico, on July 16, 1945, and in the “Fat Man” nuclear bomb dropped on Nagasaki, Japan, on August 9, 1945.

The implosion method of assembly involves a sphere of bomb material surrounded by a tamper layer and then a layer of carefully shaped high-explosive charges. With exquisite microsecond timing, the explosives detonate, forming a uniform shock wave that compresses the material until it becomes critical. It is sometimes compared to compressing a basketball into a baseball. A more accurate comparison would be compressing a grapefruit into a sphere the size of a golf ball.

A neutron emitter at the center of the device (usually a thin wafer of polonium that is squeezed together with a sheet of beryllium) starts the chain reaction. The Trinity test used about 6 kilograms of plutonium, but modern implosion devices use approximately 5 kilograms of plutonium or less—a sphere about the size of a plum.
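The “small melon” and “plum” comparisons can be sanity-checked from mass and density: a solid sphere has volume V = m/ρ and diameter d = 2(3V/4π)^(1/3). The densities used here are assumptions, not figures from the text: about 19.1 g/cm³ for uranium metal and about 15.9 g/cm³ for the gallium-stabilized delta-phase plutonium commonly used in weapons. The sketch ignores compression at detonation.

```python
import math


def sphere_diameter_cm(mass_kg: float, density_g_cm3: float) -> float:
    """Diameter of a solid sphere with the given mass and density."""
    volume_cm3 = mass_kg * 1000.0 / density_g_cm3
    radius_cm = (3.0 * volume_cm3 / (4.0 * math.pi)) ** (1.0 / 3.0)
    return 2.0 * radius_cm


# Assumed densities (not from the text): uranium metal ~19.1 g/cm^3,
# delta-phase plutonium ~15.9 g/cm^3.
uranium_d = sphere_diameter_cm(25.0, 19.1)   # ~25 kg gun-type uranium core
plutonium_d = sphere_diameter_cm(5.0, 15.9)  # ~5 kg implosion plutonium core

print(f"25 kg uranium:  {uranium_d:.1f} cm across")
print(f"5 kg plutonium: {plutonium_d:.1f} cm across")
```

Under these assumptions the uranium sphere comes out at about 13.6 cm across and the plutonium sphere at about 8.4 cm, roughly a small melon and a large plum.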

By spring 1945, the Los Alamos scientists were frantically rushing to assemble what they called the “Gadget” for the world’s first atomic test. Despite years of calculation, the staggering 20-kiloton magnitude of the Trinity explosion surpassed their expectations. Secretary of War Henry Stimson received word of the successful test while accompanying President Truman at the Potsdam Conference. At the close of the conference, Truman made a deliberately veiled comment to Stalin, alluding to a new U.S. weapon. The Soviet premier responded with an equally cryptic nod and “Thank you.” (By then, Stalin had his own spies at Los Alamos, Klaus Fuchs and Ted Hall, and was well aware of the progress of the U.S. project.)

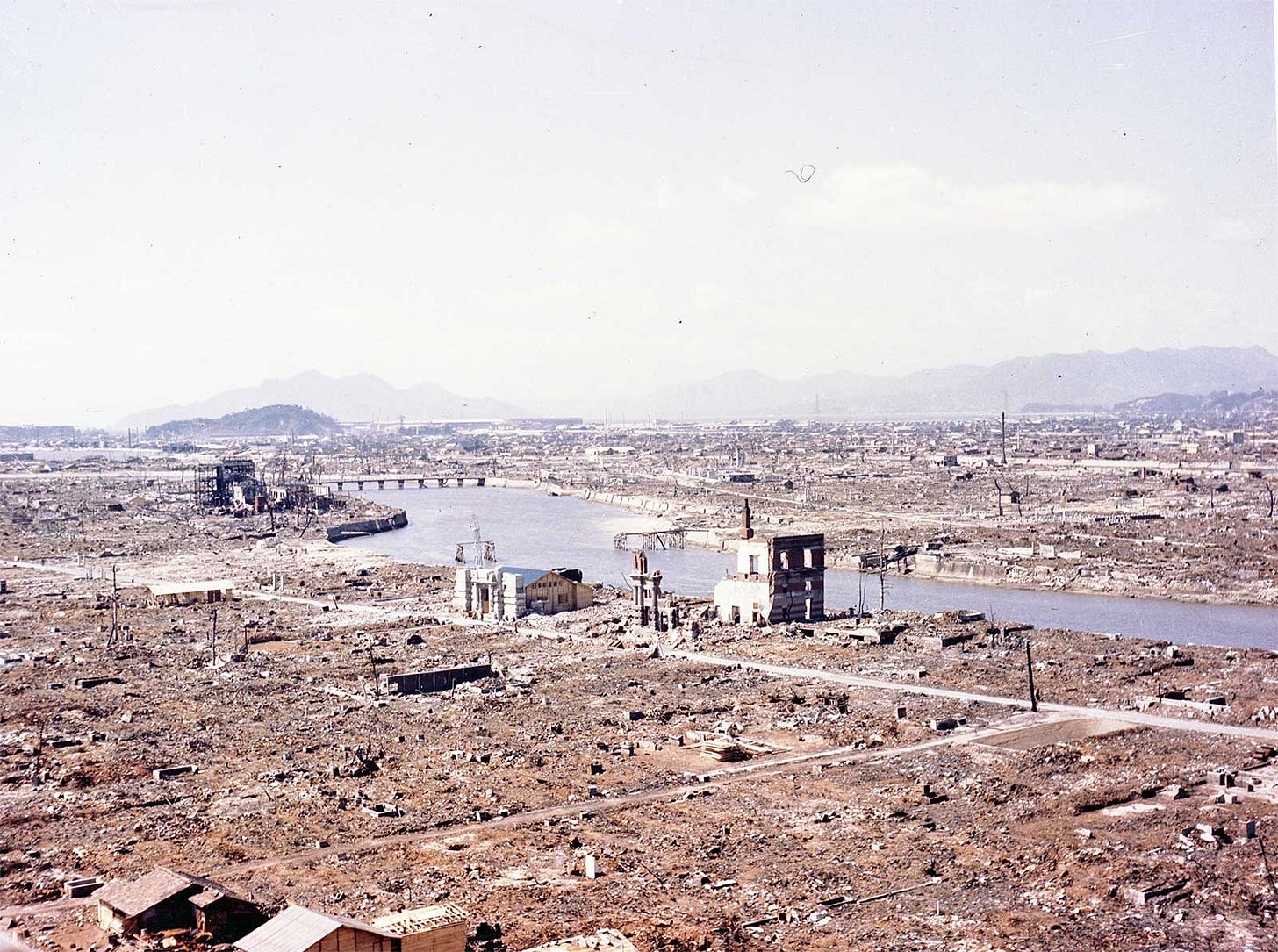

Back in the U.S., the wheels were in motion, and the first combat bomb, “Little Boy,” was on a ship headed to Tinian, an island in the Marianas roughly 1,500 miles south of Japan. In the months leading up to Trinity, top government officials had selected targets and set a policy for the bomb’s use. The eight-member Interim Committee, responsible for A-bomb policy and chaired by Stimson, concluded that “we could not give the Japanese any warning; that we could not concentrate on a civilian area; but that we should seek to make a profound psychological impression on as many of the inhabitants as possible . . . [and] that the most desirable target would be a vital war plant employing a large number of workers and closely surrounded by workers’ houses.” On August 6, 1945, Little Boy exploded with a force of 15 kilotons over Hiroshima, the first city on the target list.
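The yields quoted in this section also illustrate Serber’s point that a core blows apart before most of it can fission. The sketch below converts a yield into the mass of material that actually split, assuming roughly 200 MeV released per fission (an approximation not given in the text) and the standard convention that 1 kiloton of TNT equals 4.184 × 10¹² joules. It pairs the 20-kiloton Trinity yield with about 6 kilograms of plutonium, and the 15-kiloton Little Boy yield with its 64-kilogram uranium charge.

```python
AVOGADRO = 6.022e23
J_PER_KILOTON = 4.184e12                 # TNT-equivalent convention
J_PER_MEV = 1.602e-13
ENERGY_PER_FISSION_J = 200 * J_PER_MEV   # assumed ~200 MeV per fission


def fissioned_mass_kg(yield_kt: float, molar_mass_g: float) -> float:
    """Mass of fissile material that must split to release yield_kt."""
    fissions = yield_kt * J_PER_KILOTON / ENERGY_PER_FISSION_J
    return fissions / AVOGADRO * molar_mass_g / 1000.0


trinity = fissioned_mass_kg(20, 239.05)      # plutonium-239
little_boy = fissioned_mass_kg(15, 235.04)   # uranium-235

print(f"Trinity:    {trinity:.2f} kg fissioned, {trinity / 6:.0%} of ~6 kg")
print(f"Little Boy: {little_boy:.2f} kg fissioned, {little_boy / 64:.1%} of 64 kg")
```

On these assumptions only about 1 kg of Trinity’s plutonium (roughly 17 percent) and well under 1 kg of Little Boy’s uranium (roughly 1 percent) actually fissioned; the rest was scattered when the core blew apart.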

Dropping the Bomb

To this day, the decision to drop the bomb on Japan remains controversial, and historians continue to dispute the bomb’s role in ending the Pacific war. The traditional view argues that Truman faced a hellish choice: use the bomb or subject U.S. soldiers to a costly land invasion. Officials at the time did not believe that Japan was on the verge of unconditional surrender, and the planned land invasion of the home islands would have resulted in extremely high casualties on both sides. The months preceding the atomic bombings had witnessed some of the most horrific battles of the war in the Pacific, with thousands of U.S. troops dying in island assaults. Historians Thomas B. Allen and Norman Polmar write:

Had the invasions occurred, they would have been the most savage battles of the war. Thousands of young U.S. military men and perhaps millions of Japanese soldiers and civilians would have died. Terror weapons could have scarred the land and made the end of the war an Armageddon even worse than the devastation caused by two atomic bombs.

Immediately after the bombings of Hiroshima and Nagasaki, there was a significant moral backlash, expressed most poignantly in the writing of John Hersey, whose story of six Hiroshima residents on the day of the bombing shocked readers of The New Yorker in 1946.

But that early debate was not about whether the bombing was truly necessary to end the war. It was not until the mid-1960s that an alternative interpretation sparked a historiographical dispute. In 1965, Gar Alperovitz argued in his book Atomic Diplomacy that the bomb was dropped primarily for political rather than military reasons.

In the summer of 1945, he says, Japan was on the verge of surrender. The entry of the Soviet Union into the war against Japan in August 1945 virtually guaranteed that Japan would surrender. Truman and his senior advisors knew this but used the atomic bomb to intimidate the Soviet Union and thus gain advantage in the postwar situation.

There is significant evidence for this interpretation. Kai Bird and Martin Sherwin, for example, recount in their Oppenheimer biography that Gen. Groves himself told physicist Joseph Rotblat over dinner at Los Alamos in March 1944, “You realize of course that the main purpose of this project is to subdue the Russians.” (American Prometheus, p.284)

Some proponents of this perspective have disagreed with Alperovitz on the primacy of the Soviet factor in A-bomb decision making, but have supported his conclusion that the bomb was seen by policy makers as a weapon with diplomatic leverage.

A middle-ground historical interpretation, convincingly argued by Barton Bernstein, suggests that ending the Pacific war was indeed Truman’s primary reason for dropping the bomb, but that policy makers saw the potential to impress the Soviets, and to end the war before Moscow could join an allied invasion, as a “bonus.”

This view is buttressed by compelling evidence that most senior officials did not see a big difference between killing civilians with firebombs and killing them with atomic bombs. The war had brutalized everyone. The strategy of intentionally attacking civilian targets, considered beyond the pale at the beginning of the war, had become commonplace in both the European and Asian theaters. Gen. Curtis LeMay had already led firebombing attacks on sixty-three Japanese cities that killed hundreds of thousands of Japanese civilians.

Hiroshima and Nagasaki, in this context, were the continuation of decisions reached years earlier. Japanese estimates put the death toll from the two bombings at more than 210,000. The official U.S. estimate after the war was 110,000, though that is almost certainly an undercount. (For a comprehensive analysis, see Alex Wellerstein, “Counting the Dead at Hiroshima and Nagasaki.”)

For many, this was not a difficult choice. And the wheels were in motion. The plans were devised and implemented by the military, like all previous bombing raids. Truman’s approval was not required. He never actually ordered the bombing; he just did not stop it. It was only after the bombing of Nagasaki that Truman intervened, when he learned that a third city was to be destroyed. Close advisors said that for the President, the “thought of wiping out another 100,000 people was too horrible.” (This began the tradition of civilian control over atomic weapons.)

All during this process, the atomic scientists building the bomb had engaged in lengthy debates over the morality and consequences of dropping the bomb, particularly after it was clear that it was not needed to defeat Nazi Germany. But it was only after the bombings that the public and the political leaders began to comprehend the great danger the Manhattan Project had unleashed and began to draw a distinction between conventional weapons and nuclear weapons.

To be continued…

This is the third of my five-part series on the science, history and politics of the beginning of the nuclear age, based on my book, Bomb Scare: The History and Future of Nuclear Weapons (Columbia University Press, 2008). I hope you will see the Oppenheimer film and read the other chapters in this story:
